5 Things AI Can’t Do

AI is always confident… but not always the best tool for the job!

I was grading written work for my brand-new French 1 students. The assignment: Write 3 sentences describing your favorite celebrity. As I read through simple student sentences like “Il est beau” and “Elle est une chanteuse excéllente,” I came across this little gem:

Je m'appelle Hailey et le nom de ma célébrité est Bob l'éponge. Bob l'éponge est mon dessin animé préféré car il est drôle, mignon et s'en tire avec toutes sortes de manigances. Je pense que tout le monde devrait le regarder. Il a aussi 43 ans.

(My name is Hailey and the name of my celebrity is Spongebob. Spongebob is my favorite cartoon, for he is funny, cute, and gets away with all kinds of shenanigans. I think everyone ought to watch him. Also, he’s 43 years old.)

This student didn’t even erase the grey highlighting from her translation program! But even without that unwitting disclosure, the complicated sentences, grammatical complexity, and advanced vocabulary are a dead giveaway.

This isn’t student work – this is the work of (dum, dum DUMMMMM) - AI!!

The use of AI in this instance is so evident, it got me thinking… AI really does have some significant limitations. And so, I present to you (in no particular order):

5 Things AI Can’t Do

1. AI can’t replicate authentic human voice.

Yes, AI can write a convincing essay. What it can’t do is imitate the uniqueness, creativity, and learning process intrinsic to human communication. I’ve written about this elsewhere; when I asked AI to write me student work with errors, it did so – but many of the errors were not mistakes a real student would make. At the other end of the learning spectrum, AI may be able to generate all kinds of technical content – but for any kind of sensitive, emotionally charged, humorous, or creative content, a human will need to refine that draft. Society will continue to need artistic and innovative human thought.

2. AI can’t help you master a skill set.

Related to the above, AI can produce some polished content. However, I don’t expect polished content from my 8th grade students! I’m not interested in their final product so much as I am in their growth and development as writers and thinkers. When a student brings me native-speaker level work, they’re cheating themselves out of the opportunity to ever produce that level of work on their own. Uninspired writing is an expected part of their development, and AI can’t get them there.

Similarly, I had ChatGPT write me some ACTFL-style Can-Do statements (read them here). It did OK, and even gave me a couple of ideas I hadn’t thought of, but I generally liked the ones I wrote better. However, I have 20+ years of experience behind my work that allowed me to write good goals. How does a beginning teacher develop the expertise to write her own student objectives? Only through experience. There’s no shortcut.

3. AI can’t evaluate its own work.

Right now, AI is doing a pretty good job of generating content that looks like human work. What it’s actually doing is imitating content that was, in fact, written by a human. However, as time goes on, more and more of the content out there will be AI (not human) created, and the preliminary data suggests this will result in increasingly bizarre writing from the AI. As the data is replicated again and again, it becomes corrupted to the point that the whole thing becomes garbage.

Recycled data results in “Habsburg AI”

Data researcher Jathan Sadowski compares this effect to the biological impact of inbreeding on DNA: “Habsburg AI – a system that is so heavily trained on the outputs of other generative AI's that it becomes an inbred mutant, likely with exaggerated, grotesque features.” (source)

In other words, garbage in, garbage out.

4. AI can’t generate truly creative or unique content.

AI can quickly scour the entire internet and synthesize a neat answer in the packaging you request. This all has the appearance of being very innovative… but as you spend time with it, you realize it’s actually not. AI can produce impressive texts, but its work is also formulaic. While this is incredibly helpful in many situations, it can’t replace actual human innovation.

YouTuber Tom Scott illustrates this principle by asking ChatGPT to generate clickable YouTube video titles in the style of different content creators. The AI responded with several ‘new’ content categories:

Grammatically Correct but Uninteresting Video Titles

* A Beach Where You Can Hear the Sea

* I Paid People to Do Weird Things on Camera

Boring Challenge Video Titles

* How to Watch the News Without Being Horrified

* The No Water Makeup Challenge

Nonsense Video Titles

* The British Road that is also a Boat

* Lemon Lime Lightning – 2 MILLION VOLTS

Titles That Sound Great but are Not Based on Reality

* The Long Forgotten History of the British Moon Landings

* How to Build a 3D Printed Plasma Speaker

Each of these *sounds* like a real title, but isn’t something that would actually make a viable YouTube video that people would be interested in watching. In other words, ChatGPT could replicate content from examples, but ultimately the generation of usable content takes human judgment.

Watch and judge for yourself:

Tom Scott asks AI to generate video titles

My techie son and I had quite the discussion about this; he says you could make this argument about anything in life. Music, for example, draws inspiration from prior artists, and there is a finite number of available musical combinations, so theoretically ‘all the music’ could someday be generated. At what point is the musician generating something new, and at what point is he simply repackaging something that’s been done in the past?

The answer is impossible to quantify; it takes expert judgment to evaluate such questions. And so, at least for now, there has to be a sentient human at the beginning and at the end of AI processes.

5. ChatGPT can’t separate truth from fiction – at least, not consistently!

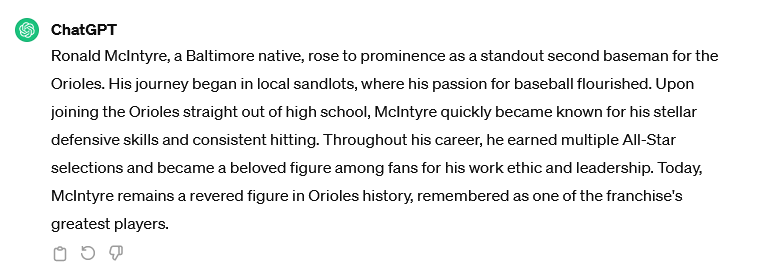

In fall of 2023 when I began drafting this blog post, I asked Chat GPT to give me a brief biography of Ronald McIntyre, the great second baseman from the Baltimore Orioles. It wrote me a beautiful 5 paragraph essay. Here’s an exceprt:

Well done, right? The only problem? Ronald McIntyre was a figment of my imagination.

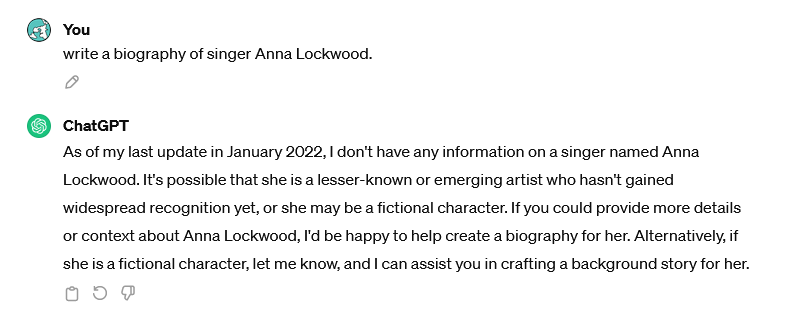

Since then, Chat GPT has improved… but it’s still inconsistent. Before posting this blog in April 2024, I’ve put in several similar prompts for imaginary celebrities. Sometimes I get a wonderful (but totally imaginary) essay; other times I get a message like this one:

In my view, this tendency to present fiction as fact is one of AI’s biggest limitations, and I’m not alone. OpenAI CEO Sam Altman cautioned, “I probably trust the answers that come out of ChatGPT the least of anybody on Earth.” (source)

Clearly, developers are aware of the issue, but as of this posting it has yet to be resolved.

User, beware.

Some Good News

OK, so the good news is, AI is pretty smart, but it’s still not as smart as I am. What a relief!

Next week I’ll share part 2 of this post – 6 more things AI can’t do. In the meantime, scroll to the footer and sign up for our newsletter so you will never miss a blog post!

Have you seen any AI fails? Share in the comments!